“All models are wrong, but some are useful”, George Box

Attributed to the British statistician George Box, this phrase is still quoted almost 50 years later. It keeps company with aphorisms like “perfect is the enemy of good”, the “80-20 rule” and “better a diamond with a flaw than a pebble without” (Confucius?).

Following up on that, perhaps because of a fear of publishing unvalidated opinions that still lingers from university, there are many examples of people accepting things as fact. They do so despite corner cases that disprove the hypothesis, or corner cases so obscure that no one previously considered them. We can speculate that no one ever pondered “what would happen if two black holes met somewhere in the universe, combined into one and we recorded it?” Yet on September 14th, 2015, that exact event was detected, and it confirmed Einstein’s prediction of gravitational waves beyond reasonable doubt. Ironically, even Einstein himself wasn’t that confident in his theory and periodically changed his position on the matter. Still, anyone using GPS has benefited from his understanding of the time/space relationship, flaws and all. So what does any of this have to do with security risk management, in three words or fewer?

Strategic Business Alignment

One of my regular questions on the podcasts is how our guests help their clients identify the point of diminishing returns. I ask mainly because I tend to spot the handful of scenarios where a solution may not be effective, and I could use some ideas on accepting good over the costly pursuit of perfection. Revisiting “strategic business alignment”, as risk professionals we need to accept that organizations often set off down a path without fully understanding how they will handle everything that comes up. The concept of the minimum viable product (MVP) was a Silicon Valley darling for years, and it can partially inform the risk assessment model. The MVP model could be cynically described as follows: get people using your software, fix what really is an issue after enough customers report it rather than agonize over every possible use case, and hopefully don’t run out of money before becoming profitable. The Agile Alliance does point out that settling for just enough that people will buy something doesn’t make a product viable in the long run. We see that regularly in cracks in cloud infrastructure, financial meltdowns and so on.

Back to “all models are wrong”: if we take the MVP concept seriously, part of our effort will be to analyze why things are not going as planned. Ideally we look for the root cause rather than applying duct tape and pushing on with the next release or growth initiative just to keep things on schedule. Schedules are important, but the courage to miss a date for safety, quality or some other darn good reason will be rewarded in the long run.

I love deadlines. I like the whooshing sound they make as they fly by. Douglas Adams

I would be the first to point out that a flaw in a business productivity application will have far less impact on society than a flaw in water treatment, electrical generation or a piece of medical equipment. But can we address flawed models through resilience? As risk professionals we often possess the uncanny ability to identify one or two scenarios that an existing or proposed control will fail to address. At this point we have a choice. We can say “this is unacceptable because …”. Or we can consult those who may know more about some aspects of the problem, or about the organization’s capability to respond. Apparently even Einstein had his doubts and would speak with others in his field.

Posing a question like “if scenario one came to pass, what is the most credible and most extreme impact?” to a roomful of subject matter experts will most likely produce numerous lengthy responses, some of them contradictory, but themes tend to emerge. Do watch for those extremes. I recently had dinner with a respected ICS security expert, and I completely agree with their position that some outcomes, no matter how unlikely, are too significant to knowingly leave to chance. If we as risk professionals identify such a scenario, I believe we should resist “damn the torpedoes” with everything we have, if professional ethics mean anything. That said, in most cases the worst possible outcome may be highly undesirable but recoverable. There is a generational difference in impact between a C-suite member leaving with a cardboard box and a nuclear wasteland or polluted water.

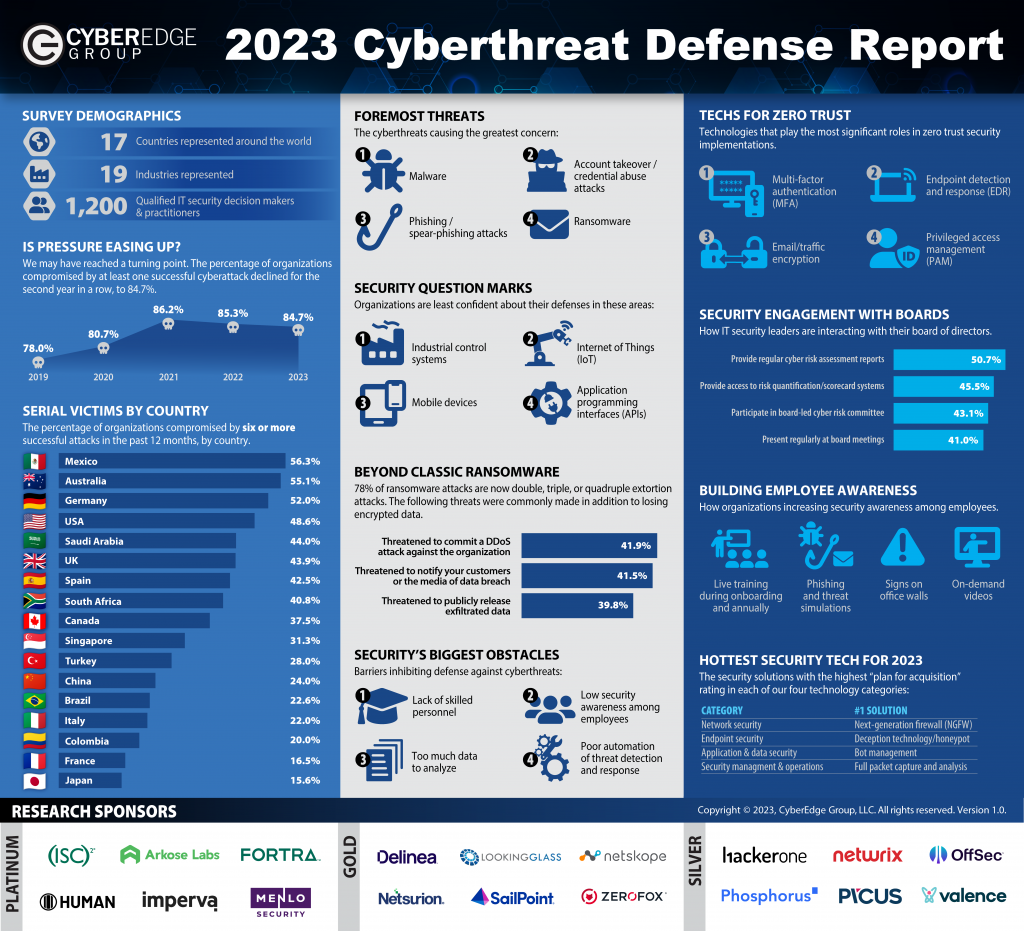

Many corporate boards list “cyber security risk” in their top five, and reviewing a firewall or application security log for five minutes will confirm that the threat is very real. That said, I know of no business that has decided to shut everything down because “things are just too challenging these days”. Ironically, many are openly evaluating whether machine learning, process automation and cloud computing can give them a marketplace advantage.

In a business world where many run toward the fire instead of away from it, can we help those we serve balance the many enterprise risks, not just cyber, to give them the greatest likelihood of a successful outcome? Tim recently recommended a book on becoming a trusted advisor, which includes a great deal of discussion on dealing with mistakes. Solving for every corner case in theory while missing the opportunity window ultimately doesn’t serve anyone.